
How a teenage printer’s writing exercise reverse-engineered the timeless principles of learning.

The core idea behind the algorithm that powers today’s most advanced artificial intelligence wasn’t born in Silicon Valley, and it had nothing to do with computers. A version of it was engineered in the dusty, ink-stained back room of a Boston print shop nearly 300 years ago, by a teenager who was tired of being told he wasn’t a good writer.

Long before he was a world-famous statesman and inventor, Benjamin Franklin was an ambitious, self-taught apprentice with a chip on his shoulder. Working for his older brother, he devoured books and secretly submitted anonymous essays to his brother’s newspaper, desperate to succeed in the world of words. But he had a problem: while his ideas were sharp, his prose was clumsy.

His own father, Josiah, delivered a blunt critique: Ben’s writing lacked “elegance of expression, method and perspicuity.”[1] For an aspiring intellectual, this was a devastating diagnosis. It confirmed his fear that he simply didn’t have the innate ‘gift’ for writing.

Instead of giving up, Franklin did something extraordinary. He rejected the idea of ‘innate talent’ and treated his flawed writing not as a personal failure, but as an engineering problem. He asked himself: Is there a system, an algorithm, that can build the skill of writing from the ground up? In effect, he decided to debug his own brain.

What Franklin developed wasn’t just a writing exercise; it was something far more profound. His process reveals a fundamental truth about how intelligence, artificial or human, actually improves. When we examine his method through a modern lens, we find that he had unknowingly architected the same learning principles that power today’s AI.

Franklin’s method can be seen as a kind of human-powered, conceptual gradient descent, in which conscious insight replaces calculus as the tool for minimizing the “error” between his writing and his goal. By deconstructing his 300-year-old protocol, we can uncover a powerful blueprint for how anyone can master a complex skill.
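For readers who want the machine-learning side of that analogy spelled out, the basic gradient descent update is shown below in generic notation (the symbols are this example’s, not Franklin’s): $w$ stands for the model’s weights, $L(w)$ for the error on the training data, $\eta$ for a small step size called the learning rate, and $\nabla_w L$ for the direction in which the error grows fastest.

$$
w_{t+1} = w_t - \eta \, \nabla_w L(w_t)
$$

Each update moves the weights a small step against the gradient, so the error shrinks a little on every pass; in the analogy, Franklin’s deliberate revisions play the role of that step.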

Franklin’s breakthrough wasn’t just learning to write better; it was systematizing the process of improvement itself. He created a feedback loop that could be applied to any skill, making excellence reproducible rather than accidental.

Franklin’s methodical approach to mastering writing

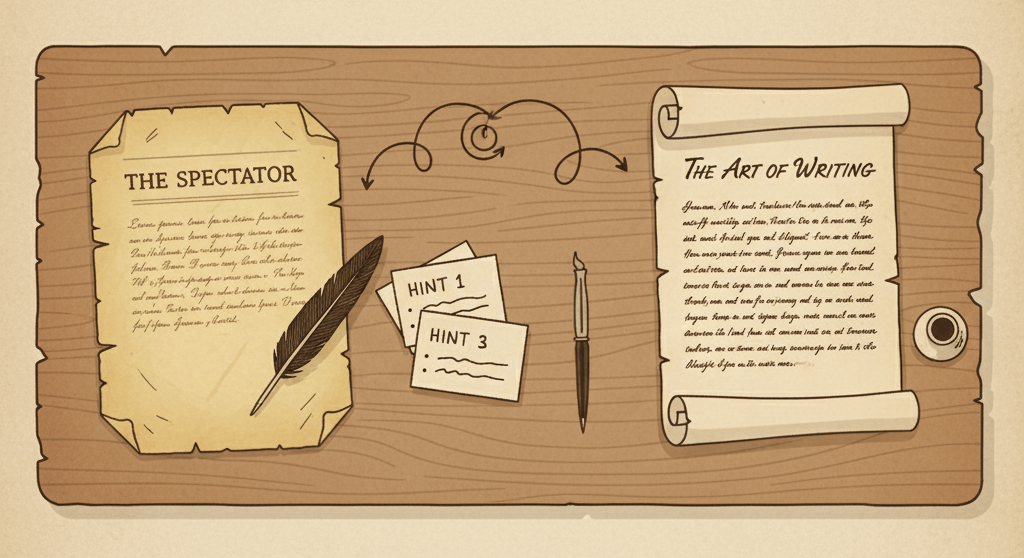

Franklin chose articles from The Spectator, a respected British periodical, as his “training data” and ran them through a repeatable loop that tightened his prose with every pass.

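Compress the source to its signal

He read an essay and jotted down short hints capturing the sentiment of each sentence, then set the hints aside for a few days.

Generate from compressed understanding

Without looking at the original, he rebuilt the essay from his hints, expressing each idea as fully as the original had, in whatever suitable words came to hand.

Compare against the ground truth

He then set his version beside the original. In his own words: “I compared my Spectator with the original, discovered some of my faults, and corrected them.”[2]

Adjust the mental model

Correcting each fault refined his internal sense of how good prose is put together, so the next pass started from an improved model.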
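Of the four steps, the comparison is the easiest to make mechanical. Below is a minimal Go sketch that assumes word-level edit distance (Levenshtein distance over words) is an acceptable numeric proxy for Franklin’s “faults”; he judged his versions by eye, not by formula. The function name `wordEditDistance` and the sample sentences are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// min3 returns the smallest of three ints.
func min3(a, b, c int) int {
	m := a
	if b < m {
		m = b
	}
	if c < m {
		m = c
	}
	return m
}

// wordEditDistance counts the fewest word insertions, deletions, or
// substitutions that turn attempt into original (Levenshtein distance
// over words). It is a hypothetical numeric stand-in for Franklin's "faults".
func wordEditDistance(attempt, original string) int {
	a := strings.Fields(attempt)
	b := strings.Fields(original)

	// prev[j] is the distance between the words of a processed so far and b[:j].
	prev := make([]int, len(b)+1)
	for j := range prev {
		prev[j] = j // an empty attempt needs j insertions to match b[:j]
	}
	for i := 1; i <= len(a); i++ {
		curr := make([]int, len(b)+1)
		curr[0] = i // delete all i words of a[:i] to match an empty b
		for j := 1; j <= len(b); j++ {
			cost := 1
			if a[i-1] == b[j-1] {
				cost = 0
			}
			curr[j] = min3(
				prev[j]+1,      // delete a[i-1]
				curr[j-1]+1,    // insert b[j-1]
				prev[j-1]+cost, // keep or substitute
			)
		}
		prev = curr
	}
	return prev[len(b)]
}

func main() {
	original := "I compared my Spectator with the original"
	attempt := "I checked my Spectator against the original"
	fmt.Println("faults:", wordEditDistance(attempt, original))
}
```

Running it prints `faults: 2`, one for each substituted word. A lower score means a closer reconstruction, which is exactly the quantity a training loop tries to drive down.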

Franklin’s protocol is a striking parallel to a modern machine learning training loop:

| Benjamin Franklin’s Method | Modern Machine Learning Equivalent |
|---|---|
| Selects high-quality articles from The Spectator | Data Collection: A curated, high-quality dataset is assembled for training |
| Reconstructs articles from “short hints” | Forward Pass: The model makes a prediction based on an input |
| Compares his version to the original to find “faults” | Loss Function: The model’s prediction is compared to the correct output to calculate an error |
| Meticulously corrects his prose based on the errors found | Backpropagation & Gradient Descent: The error is propagated backward to work out how each internal weight contributed to it, and the weights are adjusted to reduce future errors |
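The table’s rows can be traced in a minimal Go sketch. It assumes the simplest possible model, a single weight `w` fitted to points drawn from y = 2x using mean squared error. The dataset, learning rate, and epoch count are arbitrary choices made for illustration. With only one weight, “backpropagation” collapses to a single hand-derived derivative.

```go
package main

import "fmt"

// loss is the mean squared error of the model y ≈ w*x over the dataset:
// the numeric "faults" between prediction and ground truth.
func loss(w float64, xs, ys []float64) float64 {
	total := 0.0
	for i, x := range xs {
		diff := w*x - ys[i]
		total += diff * diff
	}
	return total / float64(len(xs))
}

// train fits the single weight w by gradient descent on the loss.
func train(xs, ys []float64, lr float64, epochs int) float64 {
	w := 0.0 // untrained starting point
	for epoch := 0; epoch < epochs; epoch++ {
		grad := 0.0
		for i, x := range xs {
			pred := w * x // forward pass: predict from the input
			// Derivative of (w*x - y)^2 with respect to w is 2*(w*x - y)*x;
			// with one weight, this is all "backpropagation" has to compute.
			grad += 2 * (pred - ys[i]) * x
		}
		grad /= float64(len(xs)) // gradient of the mean, not the sum
		w -= lr * grad           // gradient descent: step against the gradient
	}
	return w
}

func main() {
	// Data collection: a tiny curated dataset sampled from y = 2x.
	xs := []float64{1, 2, 3, 4}
	ys := []float64{2, 4, 6, 8}

	fmt.Printf("loss before training: %.3f\n", loss(0, xs, ys))
	w := train(xs, ys, 0.01, 500)
	fmt.Printf("learned w = %.3f, loss after training: %.6f\n", w, loss(w, xs, ys))
}
```

Using this data, the gap between `w` and 2 shrinks by a constant factor every epoch, and `w` settles very close to 2. A learning rate above roughly 0.13 would make each update overshoot and the loop would diverge, the numeric equivalent of overcorrecting every fault.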

Modern applications across domains

Anyone can use the Franklin Protocol to master a skill today, from coding to cooking to playing a musical instrument. The key is to stop passively consuming information and start building your own active learning loop.

The beauty of Franklin’s method lies in its universality. Whether you’re debugging code, perfecting a recipe, or learning a musical piece, the same principles apply: compress the knowledge, reconstruct from memory, compare against excellence, and iterate based on the gaps you discover.

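Every skill has a “ground truth,” a gold standard you can learn from. Franklin had The Spectator. For you, it might be a well-crafted open-source codebase, a trusted chef’s recipe, or a recording by a musician you admire: an exemplar that proves what’s possible.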

The goal is to find an authoritative model you can meaningfully compare your own work against.

I used a very similar protocol when I was learning the Go programming language, treating the effort like a Code Kata. After learning the basics, I designed focused challenges, such as reimplementing functions from the strings package.

Attempt the skill from your current understanding

For me, that meant writing strings.Split or strings.Join from scratch, based on my current knowledge.

Compare against a trusted ground truth

Capture precise deltas to correct
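One round of that kata might look like the Go sketch below. It assumes a hypothetical from-scratch `mySplit` written before reading the standard library, with the real `strings.Split` serving as ground truth. The test inputs are chosen for illustration, and the empty-separator branch is a deliberately naive first attempt, so the comparison has something to find.

```go
package main

import (
	"fmt"
	"strings"
)

// mySplit is a hypothetical from-scratch attempt at strings.Split.
func mySplit(s, sep string) []string {
	if sep == "" {
		// Deliberately naive first attempt: treat an empty separator as
		// "no split". The comparison below exposes this as a delta.
		return []string{s}
	}
	var parts []string
	start := 0
	for i := 0; i+len(sep) <= len(s); {
		if s[i:i+len(sep)] == sep {
			parts = append(parts, s[start:i])
			i += len(sep)
			start = i
		} else {
			i++
		}
	}
	return append(parts, s[start:])
}

// delta records one input where the attempt disagrees with the ground truth.
type delta struct {
	s, sep    string
	got, want []string
}

// equal reports whether two string slices hold the same elements in order.
func equal(a, b []string) bool {
	if len(a) != len(b) {
		return false
	}
	for i := range a {
		if a[i] != b[i] {
			return false
		}
	}
	return true
}

// findDeltas runs each case through mySplit and strings.Split and keeps
// only the disagreements: the precise list of things to correct.
func findDeltas(cases [][2]string) []delta {
	var ds []delta
	for _, c := range cases {
		got, want := mySplit(c[0], c[1]), strings.Split(c[0], c[1])
		if !equal(got, want) {
			ds = append(ds, delta{c[0], c[1], got, want})
		}
	}
	return ds
}

func main() {
	cases := [][2]string{
		{"a,b,c", ","},
		{"a,,b", ","},
		{",a,", ","},
		{"", ","},
		{"a--b", "--"},
		{"abc", ""},
	}
	for _, d := range findDeltas(cases) {
		fmt.Printf("Split(%q, %q): got %q, want %q\n", d.s, d.sep, d.got, d.want)
	}
}
```

Only the empty-separator case is reported. The standard library’s `strings.Split` splits such a string after each UTF-8 sequence, precisely the kind of edge case a first attempt tends to miss and a careful comparison surfaces immediately.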

This final step is what separates simple practice from true mastery. After refactoring based on what the comparison revealed, ask the master’s question: “What pattern did I miss that an expert sees instantly?” This meta-analysis, thinking about your own thinking, accelerates the internalization of expert mental models.

When I was learning Go, the most crucial step was not just seeing the difference but going back and refactoring my own code based on those insights. Each cycle turned the exercise from a simple task into a kata: a mindful practice where the goal wasn’t just a working solution, but internalizing an expert’s patterns. I even gave a presentation about my mistakes and how I learned from them.

Internalizing patterns through repeated error correction extends far beyond Franklin’s era; it is the very essence of modern AI. Large language models don’t “know” anything in the human sense. They are sophisticated pattern-recognition engines that have learned the statistical patterns of language so well that they can generate coherent, novel text, because in effect they have modeled the distribution of language itself.[4][5]
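To give a feel for what “learning the statistical patterns of language” means at its very simplest, here is a toy Go sketch of a bigram model: it counts which word follows which in a tiny corpus, then generates text by repeatedly picking the most frequent successor. This is a deliberate simplification and an assumption of the example; real large language models are neural networks trained by gradient descent on vast corpora, not word-pair counters. All names and the corpus are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// bigramModel maps each word to counts of the words observed right after it.
type bigramModel map[string]map[string]int

// trainBigrams "learns" from a corpus by counting adjacent word pairs.
func trainBigrams(corpus string) bigramModel {
	m := bigramModel{}
	words := strings.Fields(strings.ToLower(corpus))
	for i := 0; i+1 < len(words); i++ {
		if m[words[i]] == nil {
			m[words[i]] = map[string]int{}
		}
		m[words[i]][words[i+1]]++
	}
	return m
}

// predict returns the most frequent successor of word, breaking ties
// alphabetically so the output is deterministic. ok is false if the
// word never appeared with a successor.
func (m bigramModel) predict(word string) (next string, ok bool) {
	best := 0
	for w, c := range m[strings.ToLower(word)] {
		if c > best || (c == best && w < next) {
			next, best = w, c
		}
	}
	return next, best > 0
}

// generate extends start greedily, one predicted word at a time,
// stopping at maxWords or when no successor is known.
func (m bigramModel) generate(start string, maxWords int) string {
	out := []string{start}
	for len(out) < maxWords {
		next, ok := m.predict(out[len(out)-1])
		if !ok {
			break
		}
		out = append(out, next)
	}
	return strings.Join(out, " ")
}

func main() {
	m := trainBigrams("the cat sat on the mat the cat ate")
	fmt.Println(m.generate("the", 5))
}
```

Running it prints `the cat ate`: after “the,” the model has seen “cat” twice and “mat” once, so it picks “cat.” The model has no idea what a cat is; it has only captured which words tend to follow which.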

People who master any field follow the same principle. They don’t win by consuming the most tutorials; they win by building the most effective systems for recognizing patterns, surfacing errors quickly, and converting those errors into new capabilities.

Perhaps Franklin’s greatest insight wasn’t the method itself but his rejection of the fixed mindset. In an era when talent was considered innate, he showed that expertise could be engineered systematically. This is learning how to learn, the ultimate meta-skill.

Three hundred years ago, a teenage printer reverse-engineered the algorithm of learning with nothing more than paper and ink. Franklin’s method and machine learning operate in different domains, but they share the same core architecture: systematic improvement through error correction.

Today, we have access to endless “Spectators” in every field imaginable. Franklin gave us the blueprint. It’s time we started building ourselves with it.

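If you’re inspired by this method, don’t just admire it: try it. Pick one short exemplar from your field, reconstruct it from memory, compare your version against the original, and correct the gaps you find.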

That’s it. By running a single, conscious loop, you’ll have already started practicing like Franklin and learning like a neural network.

[1] Benjamin Franklin, The Autobiography of Benjamin Franklin (1791). Franklin’s own account of his father’s critique of his writing style.

[2] Benjamin Franklin, The Autobiography of Benjamin Franklin (1791). Franklin describes his method: “I compared my Spectator with the original, discovered some of my faults, and corrected them.”

[3] Anders Ericsson and Robert Pool, Peak: Secrets from the New Science of Expertise (2016). An accessible account of deliberate practice and how experts are made.

[4] PNAS, “Learning in deep neural networks and brains with similarity.” Scientific paper on pattern recognition in neural networks and biological brains.

[5] OpenAI, “Learning complex goals with iterated amplification.” Research on how AI systems learn and improve through iterative processes.